Kinfu for Google Tango devkit

30 May 2016 | Programming

I have been playing with Google’s Project Tango devkit recently.

Although Tango (or at least the current tablet) is more oriented towards scanning house-scale areas, I have been experimenting with smaller-scale scanning to get 3D models of humans. Tango uses optical features and its IMU to estimate the camera pose. Although this works quite well in most cases, it suffers from drift, which is a problem when you want high-precision point clouds.

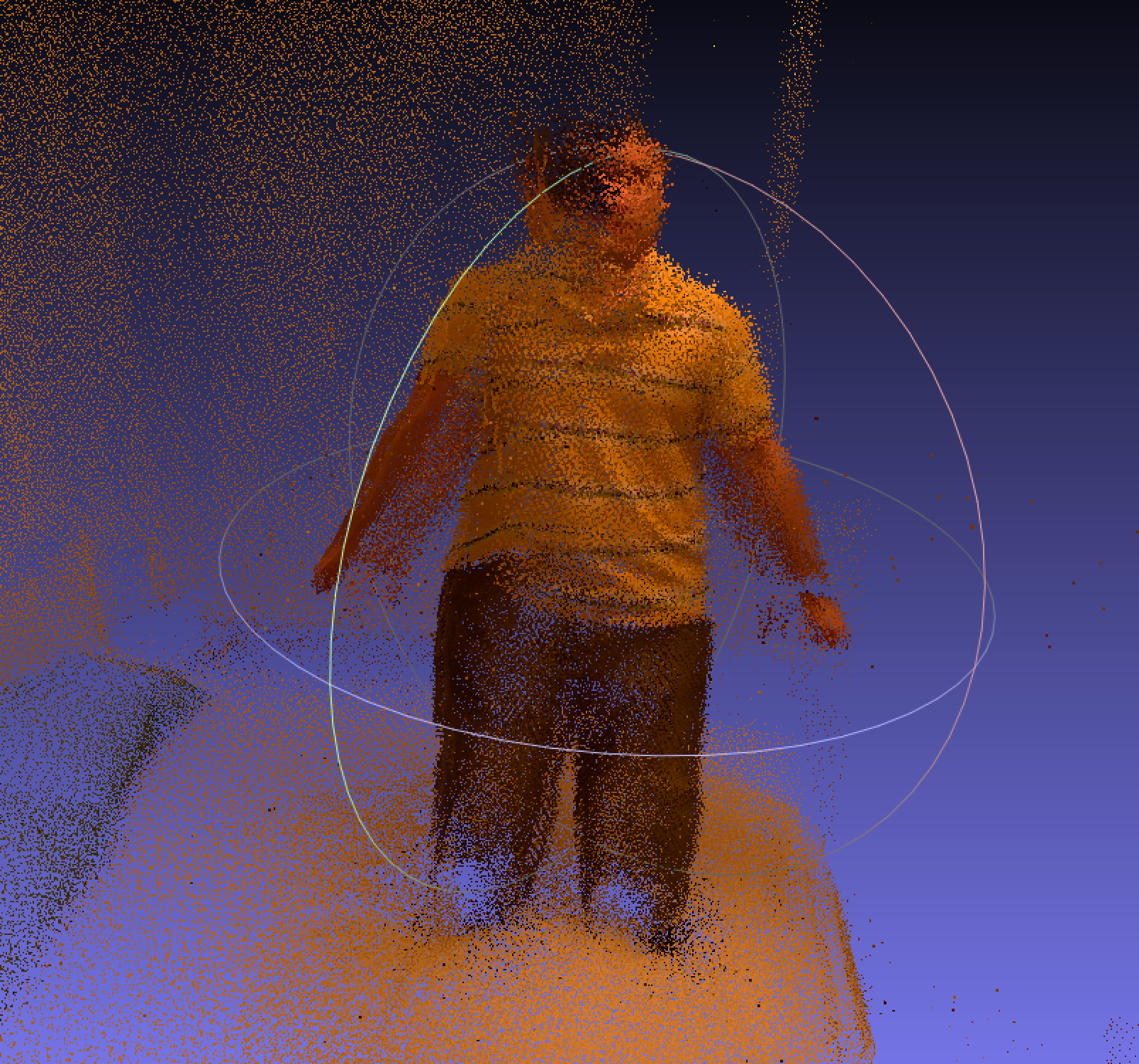

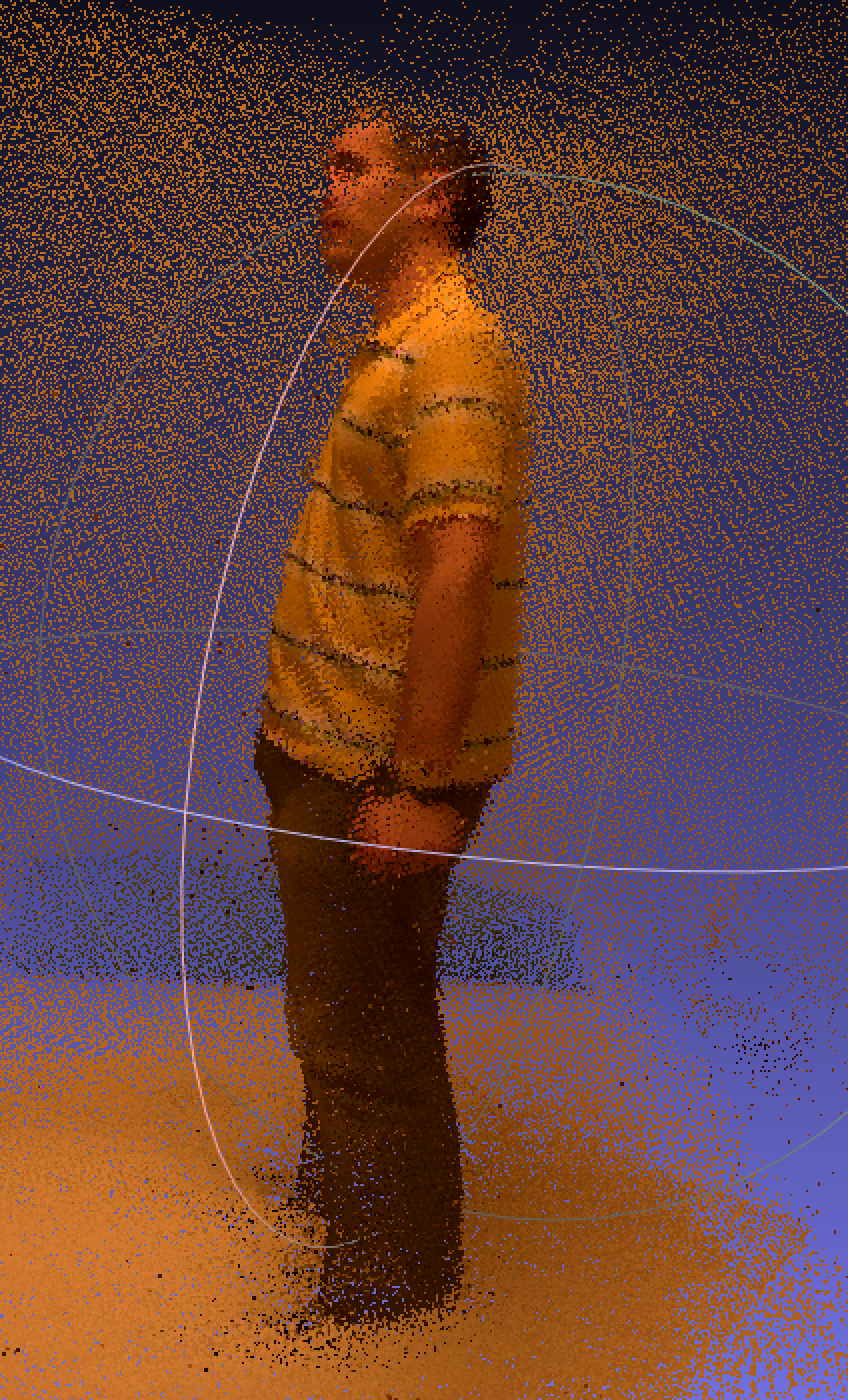

For example, here is the raw Tango point cloud for a scan. The drift is clearly visible in the front view (left image).

To solve this, I implemented the KinectFusion algorithm from Newcombe et al., inspired by the open source PCL implementation. It is currently a Python/C/OpenCL implementation, so it does not run on the tablet.
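At the heart of KinectFusion, every depth frame is fused into a truncated signed distance function (TSDF) volume with a weighted running average. The snippet below is a minimal NumPy sketch of that update step, not the Python/C/OpenCL code from this post. The function name, its parameters and the voxel layout (voxel `(i, j, k)` centered at `origin + voxel_size * (i, j, k)`) are hypothetical. It also uses the projective distance along the optical axis, a common simplification of the distance along the viewing ray, and gives every observation a weight of 1 with no cap.

```python
import numpy as np


def integrate_tsdf(tsdf, weight, depth, K, cam_to_world, voxel_size, origin, trunc):
    """Fuse one depth map into a TSDF volume (updates tsdf and weight in place).

    tsdf, weight : (X, Y, Z) float arrays
    depth        : (H, W) depth in meters, 0 = no measurement
    K            : 3x3 pinhole intrinsics
    cam_to_world : 4x4 camera pose
    origin       : world position of the center of voxel (0, 0, 0)
    trunc        : truncation distance in meters
    """
    X, Y, Z = tsdf.shape
    # World coordinates of every voxel center, in C order to match tsdf.reshape(-1).
    ii, jj, kk = np.meshgrid(np.arange(X), np.arange(Y), np.arange(Z), indexing="ij")
    pts_w = origin + voxel_size * np.stack([ii, jj, kk], axis=-1).reshape(-1, 3)
    # Transform the voxel centers into the camera frame.
    world_to_cam = np.linalg.inv(cam_to_world)
    pts_c = pts_w @ world_to_cam[:3, :3].T + world_to_cam[:3, 3]
    z = pts_c[:, 2]
    # Project with the pinhole model; voxels behind the camera are ignored.
    in_front = z > 1e-6
    zs = np.where(in_front, z, 1.0)
    u = np.round(K[0, 0] * pts_c[:, 0] / zs + K[0, 2]).astype(int)
    v = np.round(K[1, 1] * pts_c[:, 1] / zs + K[1, 2]).astype(int)
    H, W = depth.shape
    valid = in_front & (u >= 0) & (u < W) & (v >= 0) & (v < H)
    d = np.zeros_like(z)
    d[valid] = depth[v[valid], u[valid]]
    valid &= d > 0
    # Signed distance from the voxel to the measured surface, positive in front.
    sdf = d - z
    valid &= sdf > -trunc  # skip voxels far behind the observed surface
    f = np.clip(sdf / trunc, -1.0, 1.0)
    # Weighted running average of the normalized distances.
    t = tsdf.reshape(-1).copy()
    w = weight.reshape(-1).copy()
    t[valid] = (t[valid] * w[valid] + f[valid]) / (w[valid] + 1.0)
    w[valid] += 1.0
    tsdf[...] = t.reshape(tsdf.shape)
    weight[...] = w.reshape(weight.shape)
```

Calling it once per frame with that frame's camera pose accumulates a volume whose zero level set is the fused surface; averaging over many frames is what smooths out the per-frame depth noise.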

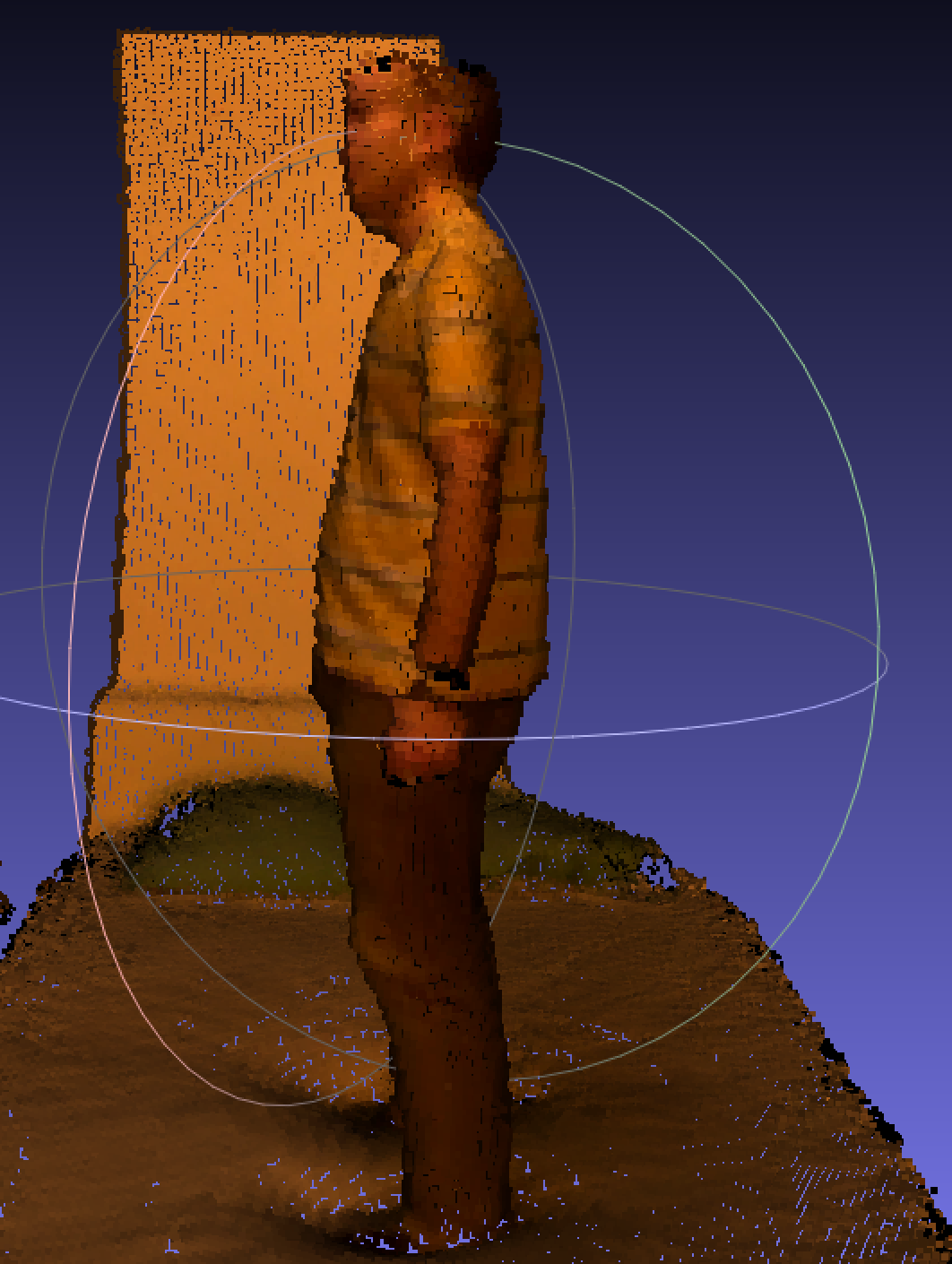

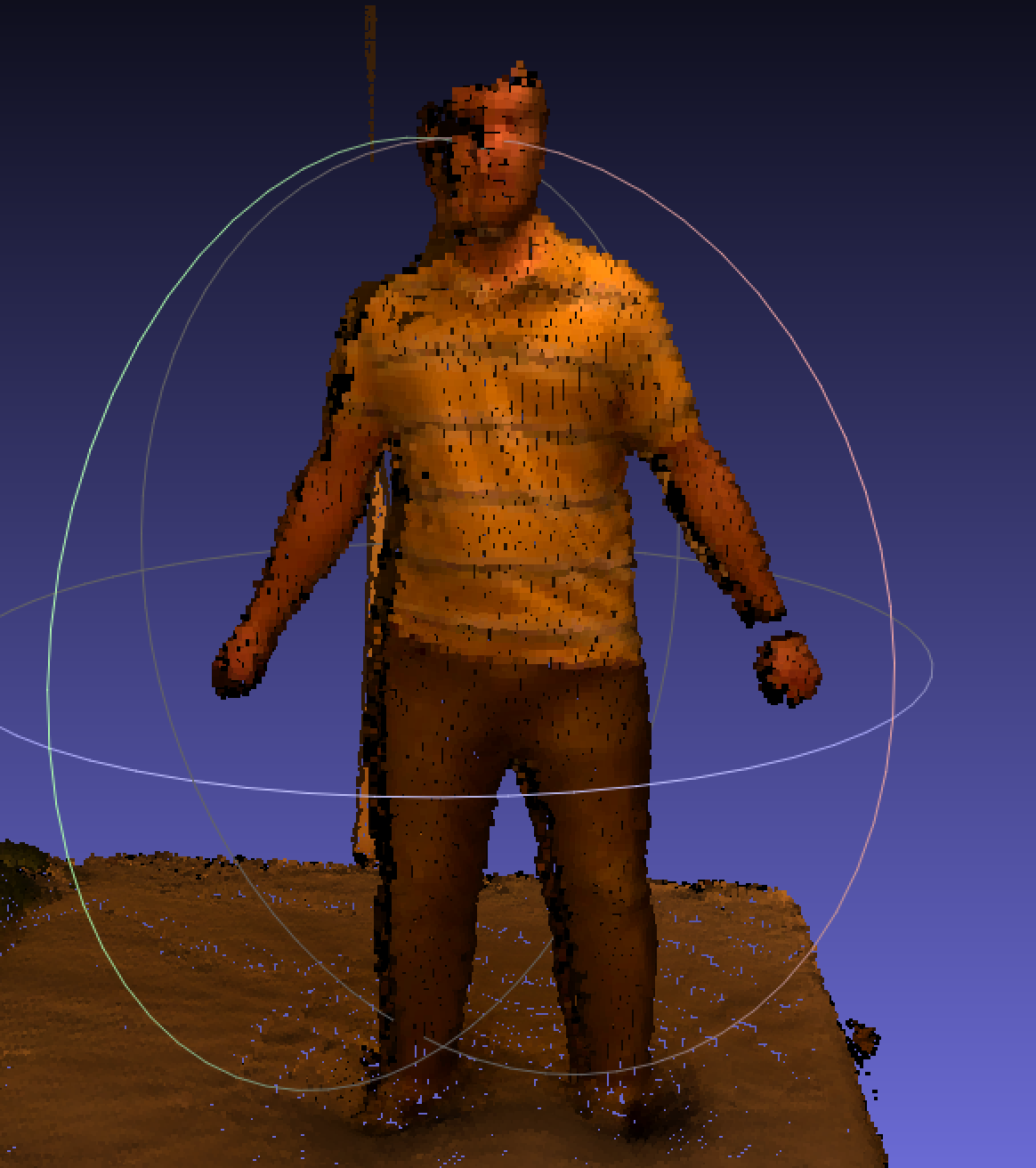

Here is the resulting point cloud after KinectFusion. As you can see, the drift has been mostly corrected, although some problems remain on the arms.
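In the KinectFusion paper, the drift correction comes from tracking each new frame against the fused model with point-to-plane ICP rather than trusting an external pose. The sketch below shows the linearized point-to-plane update under stated assumptions: correspondences are given and stay fixed, whereas KinectFusion re-associates points projectively against the raycasted model at every iteration. The function names are hypothetical, and whether this post seeds ICP with the Tango pose is not something I know.

```python
import numpy as np


def point_to_plane_step(src, dst, normals):
    """One linearized point-to-plane ICP update.

    src, dst, normals : (N, 3) arrays of corresponding points and dst normals.
    Returns a 4x4 rigid transform that moves src towards dst.
    """
    # Residual of each pair: signed distance of the src point to the dst tangent plane.
    r = np.einsum("ij,ij->i", src - dst, normals)
    # Small-angle model: n . (p + w x p + t - q) = r + (p x n) . w + n . t
    J = np.hstack([np.cross(src, normals), normals])
    x = np.linalg.solve(J.T @ J, -J.T @ r)
    w, t = x[:3], x[3:]
    # Turn the rotation vector into a proper rotation matrix (Rodrigues' formula).
    theta = np.linalg.norm(w)
    R = np.eye(3)
    if theta > 1e-12:
        k = w / theta
        Kx = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])
        R = np.eye(3) + np.sin(theta) * Kx + (1.0 - np.cos(theta)) * Kx @ Kx
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def icp_point_to_plane(src, dst, normals, iterations=10):
    """Compose successive linearized updates (fixed correspondences)."""
    T = np.eye(4)
    for _ in range(iterations):
        moved = src @ T[:3, :3].T + T[:3, 3]
        T = point_to_plane_step(moved, dst, normals) @ T
    return T
```

Because each frame is aligned to the accumulated model instead of only to the previous frame, small pose errors do not keep compounding, which is why the drift in the raw point cloud largely disappears.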

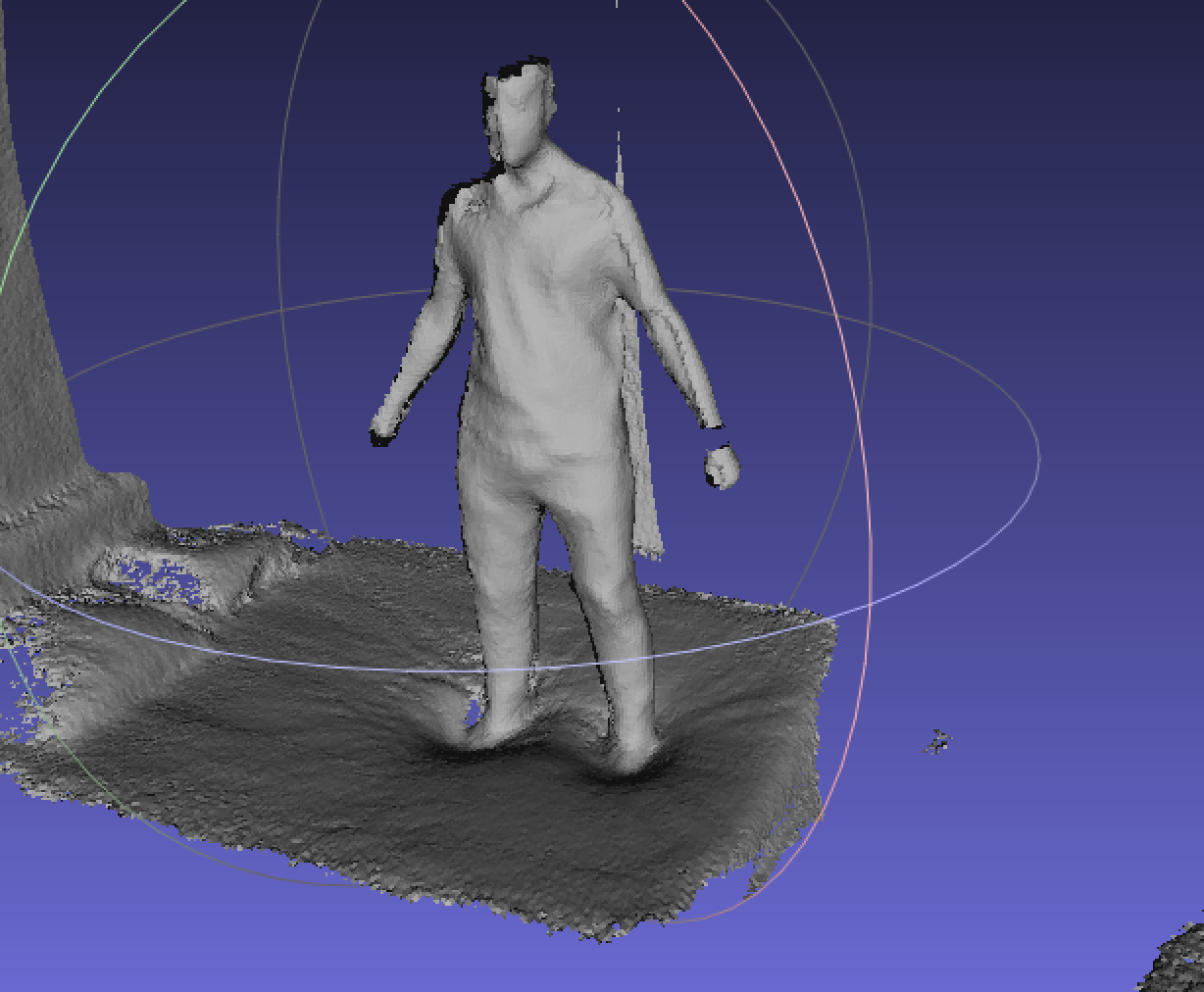

We can also mesh the kinect fusion volume :
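Meshing a TSDF volume means extracting its zero level set. Marching cubes is the usual choice, but it needs large lookup tables, so the sketch below substitutes the simpler naive surface nets method: one vertex per cell whose corners change sign, and one quad per grid edge that crosses the surface. This is not necessarily how the mesh above was produced; the function name is hypothetical, and vertices come out in voxel-index units.

```python
import numpy as np
from itertools import product


def surface_nets(sdf):
    """Extract a quad mesh from the zero level set of a signed distance grid.

    Negative values are inside. Returns (vertices, quads) in voxel-index units.
    """
    X, Y, Z = sdf.shape
    corners = list(product((0, 1), repeat=3))
    # The 12 cube edges: corner pairs that differ along exactly one axis.
    edges = [(a, b) for a in range(8) for b in range(a + 1, 8)
             if sum(abs(p - q) for p, q in zip(corners[a], corners[b])) == 1]
    cell_vertex, verts = {}, []
    for cell in product(range(X - 1), range(Y - 1), range(Z - 1)):
        vals = [sdf[cell[0] + i, cell[1] + j, cell[2] + k] for i, j, k in corners]
        hits = []
        for a, b in edges:
            if (vals[a] < 0) != (vals[b] < 0):
                # Linear estimate of where the SDF crosses zero along this edge.
                t = vals[a] / (vals[a] - vals[b])
                hits.append(np.add(corners[a], t * np.subtract(corners[b], corners[a])))
        if hits:
            # One vertex per cell, placed at the mean of its edge crossings.
            cell_vertex[cell] = len(verts)
            verts.append(np.add(cell, np.mean(hits, axis=0)))
    quads = []
    for p in product(range(X), range(Y), range(Z)):
        for d in range(3):
            q = list(p)
            q[d] += 1
            if q[d] >= sdf.shape[d] or (sdf[p] < 0) == (sdf[tuple(q)] < 0):
                continue
            # The four cells sharing grid edge p -> q; (u, v, d) is right-handed.
            u, v = (d + 1) % 3, (d + 2) % 3
            ring = []
            for du, dv in ((0, 0), (1, 0), (1, 1), (0, 1)):
                c = list(p)
                c[u] -= du
                c[v] -= dv
                ring.append(cell_vertex.get(tuple(c)))
            if None not in ring:
                # As built, the ring faces +d; flip it when the inside is at q.
                quads.append(ring if sdf[p] < 0 else ring[::-1])
    return np.array(verts), np.array(quads)
```

The quads are wound to face outward, so splitting each one into two triangles gives a mesh that standard viewers and exporters can consume.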

If you’re interested in the inner workings, here is a video of log plots I use to verify that everything is working correctly. It shows the input color map, the input depth map, the depth raycasted from the KinFu volume, and the raycasted color and normal maps. My ray skipping algorithm still has an issue that creates some holes in the raycast, but this should be fixed soon.
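The raycast marches along each pixel's ray until the TSDF changes sign from positive to negative. A common speed-up is to skip empty space: far from any surface the TSDF holds its truncated value, so the ray can advance by a sizable fraction of the truncation distance before sampling again. The sketch below shows one such scheme for a single ray, with nearest-voxel lookups instead of trilinear interpolation; the function name and the `skip` factor are hypothetical, and I do not know how the ray skipping in this post works. In KinectFusion, the normal map is then typically computed from the TSDF gradient at each hit point.

```python
import numpy as np


def raycast(tsdf, voxel_size, origin, trunc, ray_origin, ray_dir, max_dist, skip=0.8):
    """March one ray through a TSDF volume and return the distance to the first
    positive-to-negative zero crossing, or None if the ray hits nothing.

    Voxel (i, j, k) is assumed centered at origin + voxel_size * (i, j, k);
    lookups use the nearest voxel (no trilinear interpolation).
    """
    ray_dir = ray_dir / np.linalg.norm(ray_dir)

    def sample(t):
        idx = np.round((ray_origin + t * ray_dir - origin) / voxel_size).astype(int)
        if np.any(idx < 0) or np.any(idx >= tsdf.shape):
            return None
        return tsdf[tuple(idx)]

    t, prev_t, prev_f = 0.0, None, None
    while t < max_dist:
        f = sample(t)
        if f is None:
            # Outside the volume: forget the previous sample and advance one voxel.
            prev_f, step = None, voxel_size
        else:
            if prev_f is not None and prev_f > 0 and f <= 0:
                # Interpolate the crossing linearly between the last two samples.
                return prev_t + (t - prev_t) * prev_f / (prev_f - f)
            prev_t, prev_f = t, f
            # Empty-space skipping: a normalized value f suggests the surface is
            # about f * trunc away, so take a fraction of that (at least one voxel).
            step = max(skip * f * trunc, voxel_size) if f > 0 else voxel_size
        t += step
    return None
```

A larger `skip` makes the march faster, but a step that jumps across a thin structure's whole negative band misses the sign change entirely; that is one generic way a raycast can end up with holes.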